paxos

Plain Paxos Implementations in Python & Java

I've read Lamport's paper on Paxos. I've also heard that it isn't used much in practice, for reasons of performance. What algorithms are commonly used for consensus in distributed systems?

Source: (StackOverflow)

Dynamo-like databases (e.g. Cassandra) can enforce consistency by means of quorum, i.e. a number of synchronously written replicas (W) and a number of replicas to read (R) should be chosen in such a way that W+R>N where N is a replication factor. On the other hand, PAXOS-based systems like Zookeeper are also used as a consistent fault-tolerant storage.

What is the difference between these two approaches? Does PAXOS provide guarantees that are not provided by W+R>N schema?

Source: (StackOverflow)

Could someone give me a list of real use cases of Paxos. That is real problems that require consensus as part of a bigger problem.

Is the following a use case of Paxos?

Suppose there are two clients playing poker against each other on a poker server. The poker server is replicated. My understanding of Paxos is that it could be used to maintain consistency of the inmemory data structures that represent the current hand of poker. That is, ensure that all replicas have the exact same inmemory state of the hand.

But why is Paxos necessary? Suppose a new card needs to be dealt. Each replica running the same code will generate the same card if everything went correct. Why can't the clients just request the latest state from all the replicated servers and choose the card that appears the most. So if one server had an error the client will still get the correct state from just choosing the majority.

Source: (StackOverflow)

I'm new to distributed systems, and I'm reading about "simple Paxos". It creates a lot of chatter and I'm thinking about performance implications.

Let's say you're building a globally-distributed database, with several small-ish clusters located in different locations. It seems important to minimize the amount of cross-site communication.

What are the decisions you definitely need to use consensus for? The only one I thought of for sure was deciding whether to add or remove a node (or set of nodes?) from the network. It seems like this is necessary for vector clocks to work. Another I was less sure about was deciding on an ordering for writes to the same location, but should this be done by a leader which is elected via Paxos?

It would be nice to avoid having all nodes in the system making decisions together. Could a few nodes at each local cluster participate in cross-cluster decisions, and all local nodes communicate using a local Paxos to determine local answers to cross-site questions? The latency would be the same assuming the network is not saturated, but the cross-site network traffic would be much lighter.

Let's say you can split your database's tables along rows, and assign each subset of rows to a subset of nodes. Is it normal to elect a set of nodes to contain each subset of the data using Paxos across all machines in the system, and then only run Paxos between those nodes for all operations dealing with that subset of data?

And a catch-all: are there any other design-related or algorithmic optimizations people are doing to address this?

Source: (StackOverflow)

Can someone explain me simply the main differences between Operational Transform and CRDT?

As far as I understand, both are algorithms that permits data to converge without conflict on different nodes of a distributed system.

In which usecase would you use which algorithm?

As far as I understand, OT is mostly used for text and CRDT is more general and can handle more advanced structures right?

Is CRDT more powerful than OT?

I ask this question because I am trying to see how to implement a collaborative editor for HTML documents, and not sure in which direction to look first. I saw the ShareJS project, and their attempts to support rich text collaboration on the browser on contenteditables elements. Nowhere in ShareJS I see any attempt to use CRDT for that.

We also know that Google Docs is using OT and it's working pretty well for real-time edition of rich documents.

Is Google's choice of using OT because CRDT was not very known at that time? Or would it be a good choice today too?

I'm also interested to hear about other use cases too, like using these algorithms on databases. Riak seems to use CRDT. Can OT be used to sync nodes of a database too and be an alternative to Paxos/Zab/Raft?

Source: (StackOverflow)

Can someone give me a real-world example of how Paxos algorithm is used in a distributed database ? I have read many papers on Paxos that explain the algorithm but none of them really explain with an actual example.

A simple example could be a banking application where a account is being modified through multiple sessions (i.e. a deposit at a teller, a debit operation etc..). Is Paxos used to decide which operation happens first ? Also, what does one mean by multiple instances of Paxos protocol ? How is when is this used ? Basically, I am trying to understand all this through a concrete example rather than abstract terms.

Source: (StackOverflow)

Paxos algorithm can tolerate up to F failures when using 2F + 1 processors. As far as I understand, this algorithm works only with fixed number of processors. Is it possible to use this algorithm in dynamic environment, where nodes can be added and removed dynamicaly?

Source: (StackOverflow)

I am implementing Paxos in a cluster simulator application, using the documentation available in Wikipedia. Unfortunately, it leaves several doors open to interpretation and does not provide much information on key implementation issues. It is unclear and incomplete.

- Assuming a cluster divided in 3 regions, each containing 3 nodes (total = 9 nodes). What happens if communication is broken between regions? There is no way any leader can reach quorum (which is 5).

Isn't Paxos going to enter an infinite loop? I guess one should not initiate Paxos if one cannot communicate with at least a quorum of nodes.

- In Phase 1b: 'If the proposal number N is larger than any previous proposal, then each Acceptor promises not to accept proposals less than N, and sends the value it last accepted for this instance to the Proposer'.

What is 'the last value it accepted'? Is it any previous proposal number from the proposer?

What does 'instance' refer to exactly in this case?

In Phase 1a: Does one include the value to agree on with the Prepare message or is this deferred to the Accept! message? Or it does matter?

In Phase 2a: 'If any of the Acceptors have already accepted a value, the leader must Choose a value with the maximum proposal number N'.

What is value here? Is it the proposal number? I believe not, but this phrase is unclear.

In Phase 2a: 'Otherwise, the Proposer is free to choose any value'. What does this mean? A value for what? For the proposal number?

Paxos seems to rely on an increasing value of N (proposal number) to work? Is this correct?

The wikipedia entry does not discuss the initial values a node should set before starting to participate in Paxos. What are these?

P.S.: I don't have enough reputation to create a 'Paxos' tag (any volunteer?)

Source: (StackOverflow)

I read how current master election algorithms like Raft, Paxos or Zab elect master on a cluster and couldn't understand why they use sophisticated algorithms instead of simple bully algorithm.

I'm developing a cluster library and use UDP Multicast for heartbeat messages. Each node joins a multicast address and also send datagram packets periodically to that address. If the nodes find out there is a new node that sends packets to this multicast address, the node is simply added to cluster and similarly when the nodes in the cluster don't get any package from a node, they remove it from the cluster. When I need to choose a master node, I simply iterate over the nodes in the cluster and choose the oldest one.

I read some articles that implies this approach is not effective and more sophisticated algorithms like Paxos should be used in order to elect a master or detect failures via heartbeat messages. I couldn't understand why Paxos is better for split-brain scenarios or other network failures than traditional bully algorithm because I can easily find out when quorum of nodes leave from the cluster without using Raft. The only benefit I see is the count of packets that each server have to handle; only master sends heartbeat messages in Raft while in this case each node has to send heartbeat message to each other. However I don't think this is a problem since I can simply implement similar heartbeat algorithm without changing my master election algorithm.

Can someone elaborate on that?

Source: (StackOverflow)

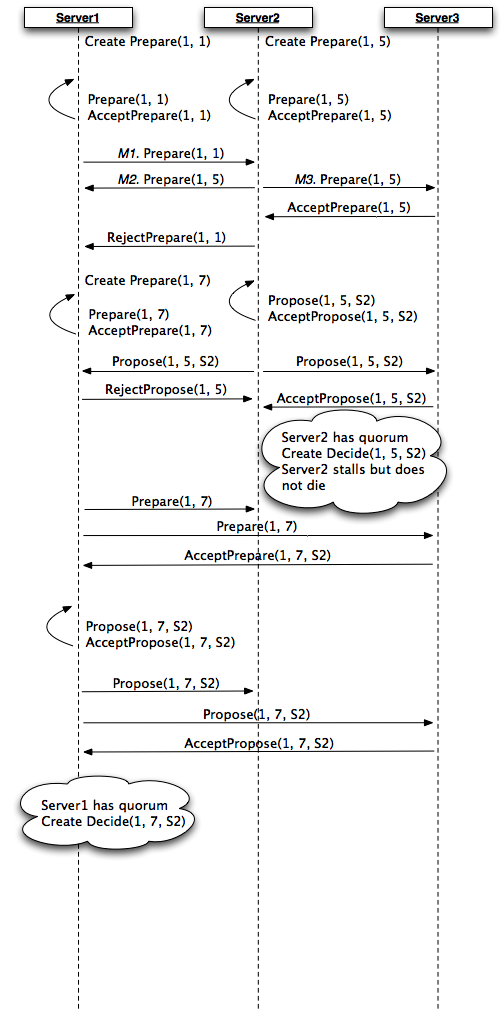

I'm looking into Paxos and I'm confused about how the algorithm should behave in this contrived example. I hope the diagram below explains the scenario.

A few points:

- Each agent acts as a proposer/acceptor/learner

- Prepare messages have form

(instance, proposal_num)

- Propose messages have form

(instance, proposal_num, proposal_val)

- Server1 and Server2 both decide to start the proposal process at the same time

- In the beginning messages M1, M2 and M3 occur simultaneously

It seems here that although the protocol is 'correct', i.e. only one value S2 is chosen, Server1 and Server2 believe that it was chosen because of different proposal numbers.

Does the Paxos algorithm only terminate when the Decide(...) message is sent out to the learners? I must be misunderstanding Paxos Made Simple but I thought the choice was made the moment the proposers achieved quorum for their Propose(...) messages.

If the choice is only made after the Decide(...) message is sent out to the agents, should Server2 terminate its sending of Decide(1, 5, S2) when it recovers because it saw a later Prepare(1, 7)?

Source: (StackOverflow)

I've tried to reason and understand if the algorithm fails in these cases but can't seem to find an example where they would.

If they don't then why isn't any of these followed?

Source: (StackOverflow)

I am trying to understand the difference between paxos and two phase commit as means to reach consensus among multiple machines. Two phase commit and three phase commit is very easy to understand. It also seems that 3PC solves the failure problem that would block in 2PC. So I don't really understand what Paxos is solving. Can anyone illuminate me about what problem does Paxos exactly solve?

Source: (StackOverflow)

The questions below are intended to be serious rather than frivolous. I lack experience in distributed systems, but I do understand how Basic Paxos works and why leader selection is useful. Unfortunately, my understanding is not deep enough to fathom the questions below.

In the paper Consensus on Transaction Commit, page 8 (page 11 of the linked PDF), we have the following statement.

Selecting a unique leader is equivalent to solving the consensus

problem.

If this statement is true, and the very purpose of Paxos to achieve consensus, why is Paxos itself not generally used for leader election?

Moreover, the same paper endorses the leader election algorithm described the Stable Leader Election paper.

If the two problems are equivalent, and the same paper endorses a different leader election algorithm, why isn't the other algorithm used for solving the general consensus problem instead of Paxos?

Source: (StackOverflow)

In Multi-Paxos algorithm, consider this message flow from the viewpoint of an acceptor:

receive: Prepare(N)

reply: Promise(N, null)

receive: Accept!(N, V1)

reply: Accepted(N, V1)

receive: Accept!(N+1, V2)

reply: ?

What should the acceptor's reaction be in this case, according to the protocol? Should it reply with Accepted(N+1, V2), or should it ignore the second Accept!?

I believe this case may happen in Multi-Paxos when a second proposer comes online and believes he is (and always was) leader, therefore he sends his Accept without Preparing. Or if his Prepare simply did not reach the acceptor. If this case may not happen, can you explain why?

Source: (StackOverflow)

In Paxos made simple Lamport describes Phase 2 (a) of the algorithm as following:

If the proposer receives a response to its prepare requests

(numbered n) from a majority of acceptors, then it sends an accept

request to each of those acceptors for a proposal numbered n with a

value v, where v is the value of the highest-numbered proposal among

the responses, or is any value if the responses reported no proposals.

- Does this mean that a proposer can send an accept request as soon as he has gathered an response from a majority of the acceptors regardless of their proposal numbers? (I find the emphasized part of the quote to imply so, because all equally-numbered proposals should have the same value, right?)

- Or does the proposer need responses with the same proposal number from the majority of the acceptors? (Meaning that responses with a number m (being less that n) don't count towards the majority for responses numbered n)

Source: (StackOverflow)